Selftalk

Privacy-first AI chatbot that lets you talk to your journal.

Part 2 - About the Product

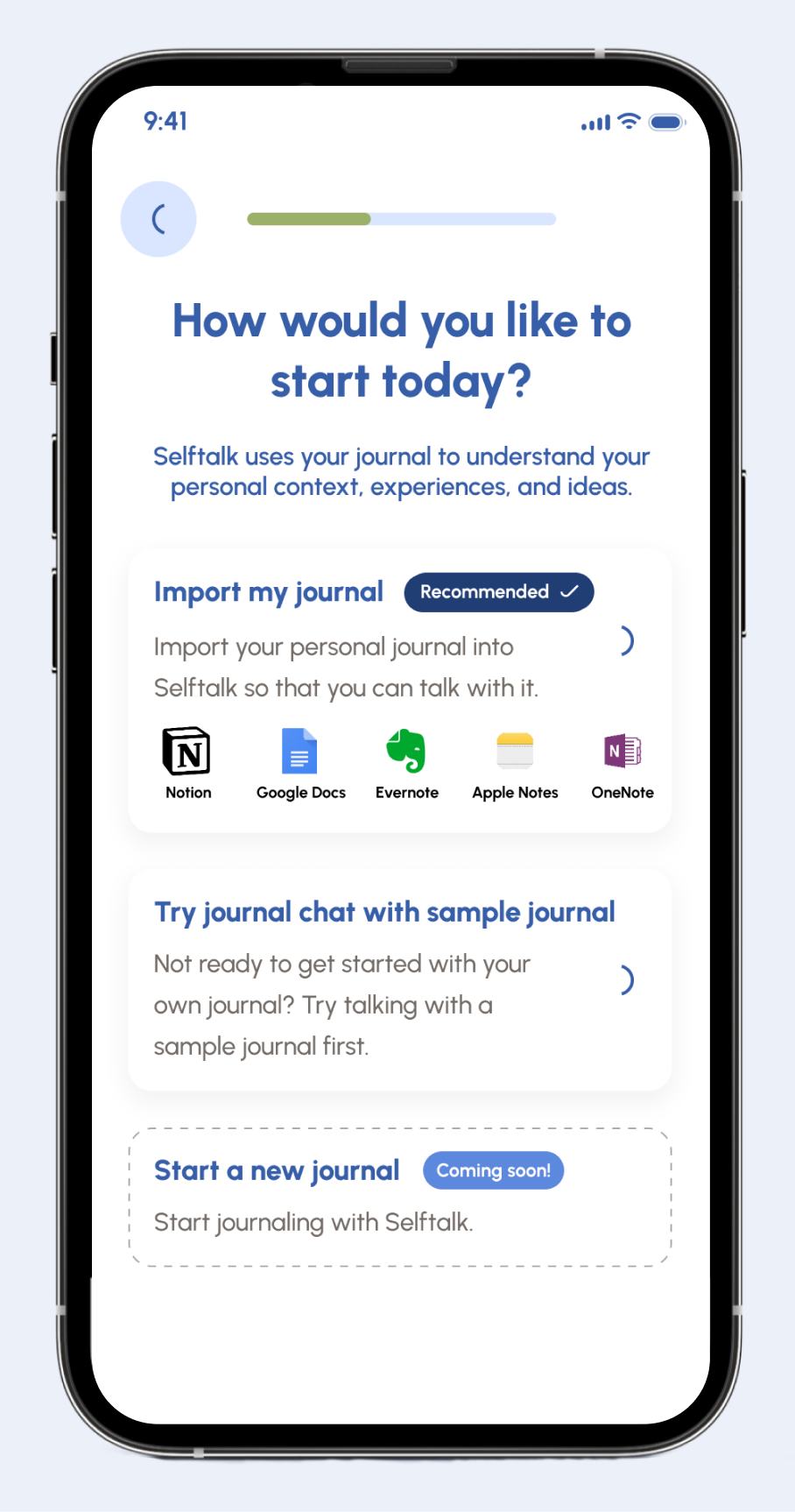

Can I try out Selftalk myself?

It’s currently still a prototype, which you can try out here or by scanning the QR code below:

I’ve also taken the first steps towards building it into a real, fully functional product, using my own journal. So far, I’ve done the following:

Digitized my pen-and-paper journal via dictation and stored it locally on my computer

Downloaded and ran a free, local, open-source LLM using LLMWare (free open-source chatbots were critical for this project)

Fed my digitized journal to the LLM in order to “fine-tune” it. Fine-tuning is one way to teach an LLM new information and get it to respond in a certain style.

Asked the LLM basic questions and got responses that cite my journal
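The digitize-then-fine-tune steps above can be sketched in Python. This is a minimal, hypothetical sketch, not necessarily how Selftalk does it, and it doesn’t call LLMWare at all. It assumes each dictated entry is saved as a plain-text file named by its date (e.g. `2023-05-14.txt`) and turns the entries into prompt/completion pairs in JSONL, a common layout for fine-tuning data. The question template and the `prompt`/`completion` field names are assumptions; check the format your fine-tuning tool actually expects.

```python
import json
from pathlib import Path


def load_entries(journal_dir: Path) -> dict[str, str]:
    """Read one entry per .txt file, keyed by file name (assumed to be an ISO date)."""
    return {
        path.stem: path.read_text(encoding="utf-8").strip()
        for path in sorted(journal_dir.glob("*.txt"))
    }


def build_finetune_records(entries: dict[str, str]) -> list[dict[str, str]]:
    """Pair a hypothetical question prompt with each non-empty entry as the completion."""
    records = []
    for date in sorted(entries):
        text = entries[date].strip()
        if not text:
            continue  # skip blank dictation files
        records.append({
            "prompt": f"What did I write in my journal on {date}?",  # hypothetical template
            "completion": text,
        })
    return records


def to_jsonl(records: list[dict[str, str]]) -> str:
    # JSONL: one JSON object per line
    return "".join(json.dumps(r, ensure_ascii=False) + "\n" for r in records)
```

For example, `to_jsonl(build_finetune_records(load_entries(Path("journal"))))` returns the contents of a training file, where `journal` is a hypothetical local folder. Nothing here touches the network, which keeps this step consistent with storing the journal only on your own machine.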

The next step is to finish training and fine-tuning the LLM to respond the way I want. I’ll post updates here as I make progress. Stay tuned!

Where did the idea for Selftalk come from?

I designed Selftalk to show how technology can augment what makes us human: our experiences, relationships, and ideas. Selftalk was inspired by thinking about how AI could augment two types of experiences:

Journaling

Keeping a journal can be good for reflection and introspection, with lots of evidence supporting its benefits for mental and physical health. But writing down your thoughts, by itself, only gets you so far. A lot of the value of journaling comes from going back to read what you wrote. I’ve never actually done this part myself: it’s hard enough to find time to write in a journal, let alone to go back and read it. What I need is something that knows my journal word-for-word and can help me quickly find relevant entries.

Getting advice

When facing tough decisions, the best-case scenario is to be able to get advice from wise, thoughtful people who have your best interests at heart. But not everyone has access to people like that. Furthermore, another person can never grasp your specific context as fully as you can. Their advice, shaped by their own experiences, may not be relevant to your situation. There will always be an irreconcilable gap between your lived experience and what others can understand about it. The best solution may be to develop your own judgment so that you can trust yourself to make decisions that make sense in your context. Of course, this is easier said than done, but technology can help.

Like many other chatbots (surely at least one!), Selftalk was inspired by the film Her. While many science fiction movies about AI focus primarily on the AI and its technology, Her focuses intensely on humans and how the technology affects them. One scene, for example, shows everyone on the street absorbed in conversation with their devices and oblivious to the world around them, a depiction relatable to anyone walking around a major city today.

Since Sam Altman, the CEO of OpenAI, tweeted that a recent update to ChatGPT was inspired by Her, some have commented that people building real products based on the film should watch it to the end, because it doesn’t have a happy ending. Regardless of what you think of the ending, the film shows more positive moments earlier on. When we first meet Theodore, he’s lonely, doesn’t go out much, and spends most of his time playing video games. After Theodore has been talking to Samantha (the AI) for some time, we see him become a more empathetic and supportive friend. It’s scenes like this, which demonstrate technology’s potential for good, that inspired Selftalk.

Who is Selftalk for?

Selftalk is for anyone who wants to gain insight from past experiences, develop good judgment, and better track the passage of time.

It’s also for anyone who wants to get more value from their own data, which I discuss more in this post.

Should Selftalk replace a trusted friend, family member, or therapist?

No. A highly skilled human still beats AI at most tasks, and Selftalk is no exception. It shouldn’t replace a trusted human advisor who understands your context, has sound judgment, considers various perspectives, and has your best interests at heart. Plus, human communication involves nonverbal cues that AI chatbots cannot currently pick up on. Whether AI will surpass humans in this regard remains uncertain, and I am skeptical.

If you are lucky enough to know someone who checks all of those boxes, then that person may still be your best option. For those who don’t have access to such a person, Selftalk may be worth a try.

Data privacy and security

With Selftalk, your journal is stored locally and never leaves your device. Most popular chatbots, like ChatGPT, Gemini, and Claude, are cloud-based, running on companies’ private servers. Using them means sharing your data with the company under its privacy policy. Local chatbots, on the other hand, run only on your computer and don't share data over the internet.

Skepticism is understandable. We've often sacrificed security for convenience with digital technology, sometimes unknowingly. A physical journal feels like one of the last holdouts. I’ve always preferred pen-and-paper for my own journal not because of any significant risk in digitizing, but because it feels more private and secure.

Convenience and security are a trade-off. More secure products, like those with two-factor authentication, tend to be less convenient due to the extra steps involved. For example, consider different approaches to storing your personal journal:

Digitizing a journal increases convenience with features like text search and entry organization, but also increases the risk that someone else could access it.

Storing your journal in the cloud (e.g. Google Drive or iCloud) goes a step further, increasing convenience and reliability by enabling access across devices and offering a backup if your computer fails. However, it also reduces security: over 3,000 companies reported data breaches in 2023, the most ever in one year.

Why use an AI chatbot for this?

AI chatbots can quickly read lots of data in a variety of formats, are highly customizable, and have an intuitive interface (which I discuss in more detail in this post). All of this makes them well-suited for the needs of Selftalk users.

In the context of Selftalk, another strength of the AI may be that it’s not a human. Selftalk assumes that some people would rather share their journal with an app than with another person.

I’d encourage everyone, even those who have reservations about AI, to try it out and weigh the pros and cons themselves. AI is constantly evolving and having a real impact, and the only way to understand it is to use it.

How do you teach the Selftalk AI to do its job?

Like all AI, Selftalk will only be as good, or bad, as the data it’s trained on. I would focus on four key themes in the training data:

To teach ethics and morality: ethical guidelines, industry standards, and philosophical texts ensure that Selftalk adheres to ethical and moral standards.

To teach clinical psychology: transcripts from therapy and counseling sessions (with consent) and scientific literature on psychology ensure Selftalk knows how to handle sensitive topics.

To teach about everyday human life: accounts of modern life from nonfiction and realistic fiction ensure Selftalk can give practical guidance and interpret situations accurately. These should reflect diverse experiences and identities, showing people navigating various decisions and challenges.

To teach empathy: transcripts of human-to-human and human-to-machine conversations ensure Selftalk knows about dialogue flow, question-asking, and emotional responses. While an AI cannot truly have empathy, the important thing here is for it to give a good enough impression.

In practice, AI training datasets are much broader than a few categories. But I would make sure to cover the areas above.

How much should we trust AI like Selftalk to do the job?

It’s worth distinguishing between the following:

1. Giving advice

2. Reflecting someone’s own thoughts and ideas back to them

Selftalk focuses on #2, helping users develop their own thinking rather than offering advice, on the premise that self-derived insights are more effective in the long term.

In my opinion, #1 is beyond what current AI technology should be trusted to do.

Social AI products, including Selftalk, have unique risks as discussed here. While users bear some responsibility for using products productively, product-builders should aim to mitigate these risks. For Selftalk, this primarily means:

Relying on users' own ideas: Selftalk reflects users' words back to them to spark insights, avoiding the generation of novel advice. Realizations based on personal ideas can be more impactful.

Highlighting limitations: Users should treat Selftalk as a guide, not an omniscient entity. They must evaluate its guidance in the context of their situation, and the product should encourage them to think this way.
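The “reflect, don’t advise” principle above can be sketched without any model at all. This is a minimal, hypothetical illustration, not Selftalk’s implementation: it ranks journal entries by simple keyword overlap with the user’s question (a stand-in for the embedding-based retrieval a real system would more likely use) and replies only by quoting the matching entries with their dates, followed by an open-ended question. The stopword list, the scoring, the `k` cutoff, and the reply wording are all assumptions.

```python
import re
from collections import Counter

# Hypothetical, deliberately tiny stopword list; a real system would need a better one
STOPWORDS = {
    "a", "about", "an", "and", "at", "be", "did", "do", "how", "i", "in",
    "is", "it", "me", "my", "of", "on", "should", "the", "to", "was", "what",
}


def tokenize(text: str) -> list[str]:
    """Lowercase the text, split it into words, and drop stopwords."""
    return [w for w in re.findall(r"[a-z']+", text.lower()) if w not in STOPWORDS]


def find_relevant_entries(
    question: str, entries: dict[str, str], k: int = 2
) -> list[tuple[str, str]]:
    """Return up to k (date, text) entries that share the most words with the question."""
    query = set(tokenize(question))
    scored = []
    for date, text in entries.items():
        counts = Counter(tokenize(text))
        score = sum(counts[word] for word in query)  # keyword overlap, not semantic search
        if score > 0:
            scored.append((score, date, text))
    # Highest score first; ties go to the more recent ISO date
    scored.sort(key=lambda item: (item[0], item[1]), reverse=True)
    return [(date, text) for _, date, text in scored[:k]]


def reflect_back(question: str, entries: dict[str, str]) -> str:
    """Reply only with the user's own dated words plus an open question, never new advice."""
    matches = find_relevant_entries(question, entries)
    if not matches:
        return "I couldn't find anything in your journal about that."
    quoted = "\n".join(f'On {date}, you wrote: "{text}"' for date, text in matches)
    return quoted + "\nWhat stands out to you now, looking back?"
```

Because every reply is assembled from the user’s own entries, this sketch cannot generate novel advice. An LLM-based version would need prompting or output checks to preserve that property.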

Another benefit: helping you keep track of the passage of time

This one is difficult to know for sure until people have used Selftalk for a long time, but I expect it may turn out to be a real benefit.

How we perceive the passage of time depends on our memories of past experiences. After all, when someone says that they “feel old”, it’s often because they remember some experience and then realize how long ago it was. Relatedly, research shows that having more novel experiences, like trying a new activity or visiting a new place, can make time seem to pass more slowly. If that’s true, then being reminded of past novel experiences might have a similar effect: revisiting our past experiences more often, and in more detail, could help us avoid the feeling that “time flies” and that life is passing us by.

Imagine looking through a photo album. Years when you took lots of photos take up multiple pages and take longer to flip through. A long period with only a few photos, on the other hand, goes by in no time.
